One of the best-known theoretical physicists of the 20th century is Stephen Hawking. One thing I particularly admire about him is that, just like Albert Einstein, he has put tremendous effort into popularizing his work and knowledge. I have recently read his latest book, The Grand Design, which I very strongly recommend. Not only has it enlightened me, it has also inspired me a lot. And it made me want to continue my work on Science4All…

In his latest book, written with Leonard Mlodinow in 2010, Stephen Hawking introduces what seems to me to be a philosophical breakthrough: the concept of model-dependent realism. This invention comes at a point in the history of science where several different theories aim at being theories of everything (or final theories), that is, descriptions of the fundamental laws of physics. This has induced a split in the theoretical physics community, as displayed in this extract from The Big Bang Theory.

Theories of everything all aim at unifying the major theories of physics. In particular, they try to unify Albert Einstein’s general relativity and quantum mechanics. Meanwhile, model-dependent realism may well succeed in unifying theories of everything. Let us start with the history of epistemology. This will help us understand how the two brilliant theoretical physicists came up with their concept. We will then explain the concept in detail.

History of Epistemology

A simple sentence such as “the sun rises every morning” has raised a lot of philosophical reflection over the years. Can this sentence be considered science, or simply a belief? Or even metaphysics? Obviously, it is something that we have observed every morning until now, but how can we generalize this observation to every morning for all time? This relationship between observations and knowledge has become a major problem of philosophy, in particular of epistemology, which is concerned with structuring knowledge.

In fact, thinking that this was a problem was already a major breakthrough in philosophy. Although a few ancient philosophers noticed it, including the Greek scholar Aristotle and the Muslim scientist Alhazen, knowledge had barely been related to observations until the 16th century. At that time, metaphysics and religions were considered as the roots of knowledge, and were transmitted to new philosophers as such. But then came two philosophers whose open-mindedness at such a time is mesmerizing.

I’m going to start with the French philosopher René Descartes. He noticed that philosophers often contradicted each other. This made him willing to doubt everything he had been taught, as underlined in the following quotation.

The first was to include nothing more in my judgments than what presented itself to my mind so clearly and distinctly that I had no occasion to doubt it, René Descartes.

Once again, this might seem like an obvious thing to say today. However, even though he was not the only one, daring to question the authority of priests and philosophers at that time required extraordinary open-mindedness (and maybe there’d be fewer conflicts between communities nowadays if everyone started to doubt their prejudices…). Still, René Descartes believed that metaphysics was the root of knowledge, as shown by his tree of knowledge (see on the right).

The other great philosopher is the English philosopher Francis Bacon. He is regarded as the father of the scientific method.

Men have sought to make a world from their own conception and to draw from their own minds all the material which they employed, but if, instead of doing so, they had consulted experience and observation, they would have the facts and not opinions to reason about, and might have ultimately arrived at the knowledge of the laws which govern the material world, Francis Bacon.

By saying that, Francis Bacon was creating the bridge between observation and knowledge. This bridge has been crossed by all scientists since. It is the main component of what is now known as empiricism. According to empiricism, knowledge should be built from observations. Once again, it sounds obvious nowadays. But, at the time, all knowledge was equated with the teachings of philosophers and priests. Saying that meant questioning authority.

Indeed. This period is also known as the Copernican Revolution, after the astronomer Nicolaus Copernicus. In 1543, he claimed that the Earth was not the centre of the universe. On the left of the figure below, you can see the Copernican model, with the Sun, the Earth and Mars. On the right, the Ptolemaic system is displayed. It was this latter system that the Church considered to be reality at that time.

The questioning of authority at that time was not well received. Indeed, the Italian scholar Galileo Galilei fiercely defended Copernicus’ theories, claiming that they described reality. As a result, sadly, he was sentenced to imprisonment by the Catholic Church in 1633.

That’s a great question, on which the German philosopher Immanuel Kant wrote at length. He distinguished phenomena from the noumenon. While phenomena are what our senses observe, the noumenon includes existing objects and events that lie beyond the reach of our senses. Phenomena can be known through empiricism. But the noumenon is unknowable.

Talking about the noumenon is considered pointless by logical positivism (also known as logical empiricism, scientific philosophy, and neo-positivism). This was introduced in the 1920s by a group known as the Vienna Circle. The most important feature of positivism is the concept of verificationism. According to it, scientific knowledge should be restricted to theories which can be verified directly by experiments. Claims which cannot be verified are therefore not relevant to science. Although appealing, this concept was strongly criticized by the Austro-British philosopher Karl Popper in the 1930s.

Popper claimed that verificationism was too restrictive. For instance, nowadays, scientists all believe in the existence of electrons, which are fundamental particles known for having a negative electrical charge. Electrons are taught in all physics courses in all universities of the world. Yet, their existence has not been directly verified. They cannot be observed directly. What we can observe are only the indirect effects of their existence, such as electricity. Yet electricity would be quite complicated to explain without electrons, which cannot be assumed to exist if we apply the concept of verificationism.

Yes, he did. He introduced falsifiability. According to this concept, any theory which implies observations that can be tested (and can hence be proven wrong) can be considered a scientific theory. This distinguishes such theories from metaphysical theories, which imply no observations. Popper’s concept was a philosophical breakthrough. It made it possible to regard complex theories such as relativity theory or quantum theory as scientific theories. But it doesn’t seem to have pleased Stephen Hawking…

More recently, even more complex theories like string theory and loop quantum gravity have been developed. These theories imply observations which are harder to test. Some scientists have thus questioned their validity as scientific theories, as displayed in the following extract from The Elegant Universe by NOVA, hosted by Brian Greene.

In particular, Hawking and Mlodinow present the idea of the multiverse, according to which our universe is only one among many. The other universes have different laws of physics. In most of these universes, the laws imply a rapid disappearance or the non-existence of intelligent life. This theory of the multiverse helps explain why the laws of our universe seem incredibly well suited to our existence, just like the existence of plenty of planets explains why the properties of our planet seem incredibly well suited to our existence. Yet, this theory implies no possible observation. Thus, it would be considered metaphysics by Popper. That’s one of the reasons why Hawking and Mlodinow introduced the concept of model-dependent realism.

Observations and models

In order to introduce model-dependent realism, I’ll first talk a little bit more about observations and models.

An observation is a measurement, which can be made by one of our five senses. But since our senses are not that reliable (nor is our brain, which interprets what our senses measure), an observation is better made with some measurement device. In fact, for an observation to really be trustworthy, it should be made by several measurement devices at several times. This gives us an important feature of observations: they need to be reproducible. An observation should be a sentence that starts by stating the conditions under which the measurement will be made, and ends with the value of the measurement. For instance: if you look at the sky with a telescope in the direction where, according to Copernicus’ model of the universe, Mars should be, and if the sky is dark and cloudless, then you’ll see a red dot.

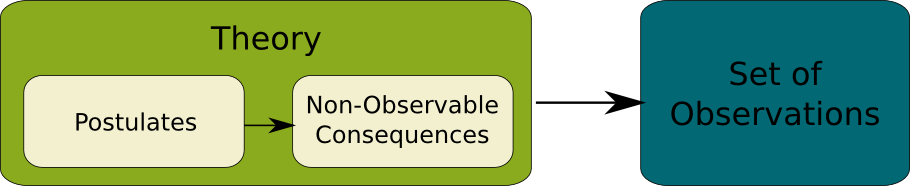

A model is defined by a set of postulates, also known as hypotheses. For instance, the first postulate of Albert Einstein’s special relativity claims that the laws of physics do not depend on uniform motion. This is known as the principle of relativity. The second postulate asserts that the speed of light in vacuum is the same for all observers in uniform motion. In mathematical terms, these postulates correspond to the axiomatisation of the theory.

Indeed. The consequences are what logically follows from the postulates. For instance, according to relativity theory, that is, if we assume its two postulates, we can deduce that the speed of light is the maximum speed in the universe. Making these deductions may be complicated. But if the postulates are simple enough and well formulated, we can use mathematics to do that. Quite often though, the mathematics is too hard for scientists to handle. Simulations are then used to get an approximation of the consequences of the postulates.

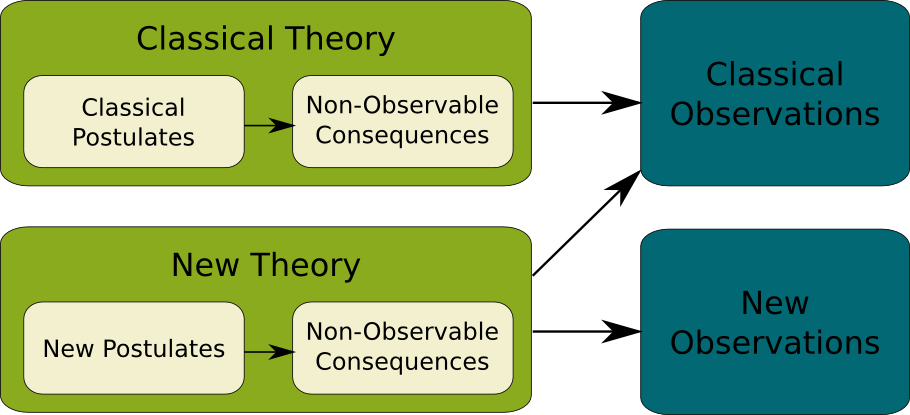

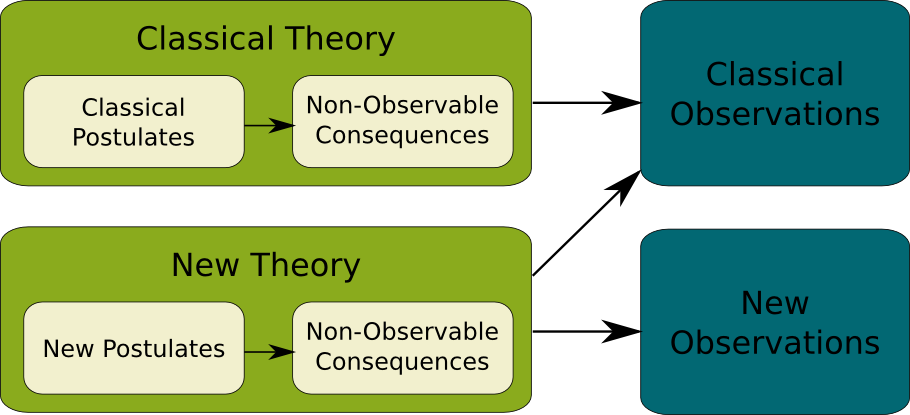

Indeed. Some of the consequences of a theory, like the maximum speed in the universe, cannot be observed, while others are observable. For instance, special relativity implies length contraction and time dilation, which are not directly observable. However, time dilation implies that time on board satellites orbiting the Earth is dilated. Thus, if we synchronize a clock on Earth and a clock on a satellite, then, after some time, the time shown by the clock on the satellite will no longer match the one on Earth. This is a phenomenon that we can observe. And we do observe it. In fact, clocks on satellites are frequently adjusted so that they show the actual time on Earth. More generally, a theory implies a set of observations, as shown in the following figure, where the arrows denote implication.
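To give an idea of the size of this effect, here is a minimal sketch of the special-relativistic time dilation of a clock on a satellite. The satellite speed is an assumed, approximate value for a GPS-like orbit (it is not taken from the book), and the sketch deliberately ignores the gravitational effect described by general relativity, which also affects real satellite clocks.

```python
import math

C = 299_792_458.0           # speed of light in vacuum, in m/s
SATELLITE_SPEED = 3_874.0   # m/s, ASSUMED approximate speed of a GPS-like satellite
SECONDS_PER_DAY = 86_400.0

def lorentz_factor(speed):
    """Time dilation factor gamma = 1 / sqrt(1 - v^2 / c^2)."""
    return 1.0 / math.sqrt(1.0 - (speed / C) ** 2)

def daily_lag(speed):
    """Seconds by which the moving clock falls behind during one Earth day.

    Special relativity only: the moving clock ticks 1 / gamma seconds
    for each second that elapses on the clock at rest.
    """
    return SECONDS_PER_DAY * (1.0 - 1.0 / lorentz_factor(speed))

lag = daily_lag(SATELLITE_SPEED)
print(f"Moving clock lags by about {lag * 1e6:.1f} microseconds per day")
```

Under these assumptions, the lag is on the order of a few microseconds per day. It is tiny, but measurable with atomic clocks, which is what turns a consequence of the postulates into an observation.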

Well, not according to verificationism. Verificationism would not care about the theory, since the theory cannot be verified (in particular its postulates and non-observable consequences). Falsifiability is better, as it says that as long as a theory generates the right set of observations, it can be considered right. However, we are used to thinking that, if there are two candidate theories, then one of them will be proven false by some observation we haven’t made yet, as in the following graph. From the following graph, we can deduce that the classical theory is false thanks to new observations.

As you can see though, there are several theories which explain classical observations. More generally, a set of observations may be explained by several theories (and thus several sets of postulates), although a set of postulates implies only one set of observations. In mathematical terms, this means that associating sets of postulates to implied sets of observations is not injective, since different sets of postulates may imply the same set of observations.
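The non-injectivity described above can be illustrated with a toy sketch. The theory names and the observations listed here are made up for the example; the only point is that several different sets of postulates can be mapped to the very same set of observations.

```python
# Toy data: each theory (a set of postulates, represented by its name)
# implies exactly one set of observations. All entries are hypothetical.
SHARED = frozenset({
    "red dot where Mars is expected",
    "sun rises every morning",
})

implied_observations = {
    "copernican": SHARED,
    "ptolemaic": SHARED,
    "virtual_program": SHARED,
    "no_planets": frozenset({"sun rises every morning"}),
}

def is_injective(mapping):
    """True if no two theories imply the same set of observations."""
    return len(set(mapping.values())) == len(mapping)

def theories_explaining(observations, mapping):
    """All theories whose implied observations are exactly the given set."""
    return sorted(name for name, obs in mapping.items() if obs == observations)

print(is_injective(implied_observations))
print(theories_explaining(SHARED, implied_observations))
```

Each key has a single value (one set of postulates implies one set of observations), but three keys share the same value, so the observations alone cannot tell these three theories apart.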

Now, suppose that we were able to make all observations. Then…

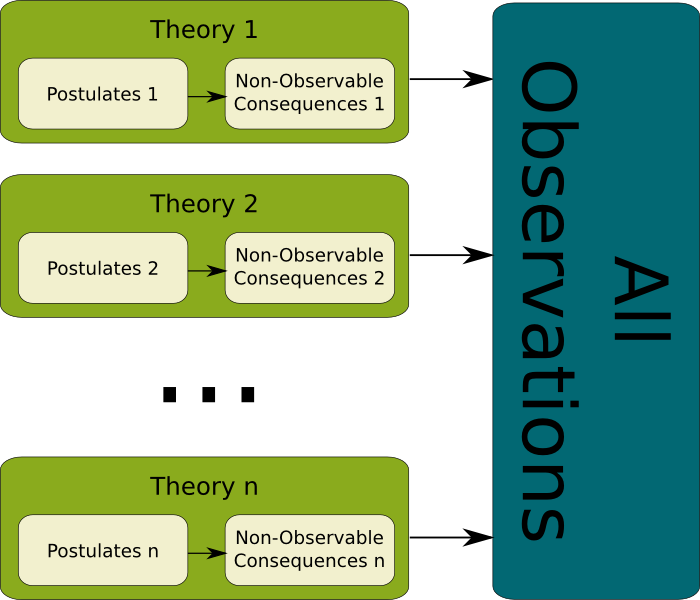

Precisely! Let’s call them accurate theories (this is a concept I came up with; it is not used by Stephen Hawking and Leonard Mlodinow). Accurate theories are displayed in the following figure.

Yes. In fact, constructing one accurate theory would be quite easy, provided we knew all observations. Let’s just postulate the existence of some entity (let’s call it God…) which decides that, for any scenario of observation, the result of the observation is indeed the one that we observe. Let’s call this theory the God-decides-every-observation theory. Well, obviously, this God-decides-every-observation theory is accurate.
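One way to picture such a theory is as a plain lookup table. The sketch below is only an illustration under that assumption: the recorded observations are made up, and `is_accurate` is my own hypothetical helper that checks a theory against every recorded observation.

```python
# Recorded observations: conditions of the measurement -> measured outcome.
# These two entries are made up for the example.
observations = {
    "look towards Mars on a dark, cloudless night": "red dot",
    "look east at dawn": "sun rises",
}

def lookup_theory(conditions):
    """Returns whatever was observed; says nothing about anything else."""
    return observations[conditions]  # KeyError for conditions never observed

def is_accurate(theory, recorded):
    """True if the theory reproduces every recorded observation."""
    return all(theory(cond) == outcome for cond, outcome in recorded.items())

print(is_accurate(lookup_theory, observations))
```

The table is accurate by construction, since it merely repeats what was observed. On the other hand, asking it about conditions that were never observed raises a `KeyError`: it cannot predict anything.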

You could build a more advanced theory (let’s call it classical mechanics) which explains quite a lot of observations. Whenever an observation cannot be explained with this advanced theory, you could invoke God’s work once again. This theory would be accurate too. This is exactly what the famous English physicist Isaac Newton did when he thought that his theory implied an increasing deviation of the trajectory of Mars caused by the Earth.

As Kant had foreseen, we can’t. Of course, if we apply verificationism, we could restrict ourselves to saying that only observations are reality, but observations are definitely dull things to say. You cannot say that the planet Mars can be seen in the sky right now without considering a theory which claims that Mars exists. All you can say about the observation is that if you look up at the sky right now, then you will see a red dot.

In order to be able to talk about the planet Mars (as well as other objects and events of the universe), Stephen Hawking introduced model-dependent realism. According to this philosophical paradigm, all accurate theories can be considered as true. As a result, their postulates, as well as their non-observable consequences, can be considered as a reality. Each accurate theory implies its reality. The reality of an accurate theory may contradict the reality of another accurate theory. That’s why the reality depends on the considered accurate model.

As almost everyone considers the model where the planet Mars exists, and as this model does not contradict the set of observations made so far, our models commonly take its existence as a postulate, or as a non-observable consequence. According to these common models, the existence of Mars is real. But one could assume that we live in a virtual program where an application displays a red dot in the sky. And I’m pretty sure an accurate theory could be defined based on this reasoning. For this virtual-program theory, Mars is not real. Kind of like in the movie The Matrix.

Accuracy and Usefulness

Let’s now consider an accurate theory. For all I know, adding to this theory that the Earth is the centre of all universes leads to no contradiction with observations. Therefore, according to this theory, the Earth really is the centre of all universes, right? Ptolemy and the Catholic Church were thus right, weren’t they? In fact, the geocentric model made by Ptolemy cannot be considered as wrong as opposed to the Copernican system, since they both match observations. Both of them are as right as each other.

There is. For instance, one way of ranking theories frequently used by scientists is the number of postulates. For a long time, scientists have believed that the universe could be explained by a very limited number of postulates. Such theories are thus very attractive. They are considered elegant. They are considered sexy. Newton’s classical mechanics was extremely appealing as it could be summed up in three simple laws. Einstein’s special relativity was even more glamorous, as it only had two postulates. However, there is no reason why the laws of the universe should be that simple.

In particular, Gödel’s incompleteness theorem has created a fear that the laws of physics may never be stated with a finite number of postulates. According to this theorem, any theory that satisfies a small number of properties is either inconsistent (that is, some statement can be proven both true and false from the theory’s postulates) or incomplete (that is, some statement can be proven neither true nor false from the theory’s postulates).

In fact, in The Grand Design, Hawking and Mlodinow highlight the fact that a theory could be defined as a set of more specific theories which each apply only in certain cases, as long as, for all observable cases in which several specific theories apply, the predictions of all these theories are the same. As a result, Einstein’s relativity theory and quantum theory may not be contradictory. We just need to specify when one can be applied, and when the other can be applied. The book actually explains that M-theory has managed to gather specific theories so that it can explain all observations made so far.
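The condition on overlapping cases can be sketched as follows. This is a toy illustration, not the construction used in the book: the two theories, their domains of validity and the tolerance (standing in for measurement precision) are all assumptions chosen for the example. Both theories predict the time dilation factor for a speed given as a fraction `beta` of the speed of light.

```python
import math

# Each specific theory: (name, domain of validity, prediction).
# The domains below are hypothetical and chosen so that they overlap.
THEORIES = [
    ("newtonian", lambda beta: beta < 0.01, lambda beta: 1.0),
    ("relativistic", lambda beta: beta >= 0.001,
     lambda beta: 1.0 / math.sqrt(1.0 - beta ** 2)),
]

def predictions(beta):
    """Predictions of every specific theory whose domain covers this case."""
    return {name: predict(beta)
            for name, applies, predict in THEORIES if applies(beta)}

def is_consistent(cases, tolerance=1e-4):
    """True if, wherever several theories apply, they agree within tolerance."""
    for beta in cases:
        values = list(predictions(beta).values())
        if values and max(values) - min(values) > tolerance:
            return False
    return True

cases = [0.0, 0.0005, 0.005, 0.009, 0.5, 0.9]
print(is_consistent(cases))
```

With the assumed tolerance, the two theories agree on the overlap of their domains, so the patchwork can be treated as one theory. With a much finer tolerance, for instance `1e-6`, the same check fails, which is a reminder that agreement here is always relative to what can actually be observed.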

The main advantage of the Copernican system compared to the Ptolemaic system is not the number of its hypotheses. In fact, both of them have unnecessary hypotheses: we don’t need to assume that the Earth is the centre of the universe, nor that the Sun is the centre of the universe, to explain the observations we have made so far. However, the Copernican system is considered a better system than the Ptolemaic system. That’s mainly due to its simplicity. Simplicity is extremely important. That’s why I am doing my best to make science simple. As you can read on the Science4All home page, I invite everyone to make it simple.

The Copernican model is simpler. It’s much easier to understand and visualize the circles described by planets around the Sun than to understand and visualize epicycles around the Earth. Calculations to make predictions are easier with the Copernican system. For instance, it is obvious with the Copernican system that the distance between Mars and the Sun is nearly constant. But if you want to launch some machine (let’s call it the Curiosity rover) to visit and study Mars, the Ptolemaic system might in fact be better.

Indeed. A theory can be considered better than another if it is more useful. It’s a major criterion considered in The Grand Design. The God-decides-every-observation theory is a bad theory, because it is useless. For one thing, it would take infinitely long to write and is obviously impossible to read. It cannot be understood. It’s impossible to make predictions with it (except, maybe, by doing a psychological analysis of God, but, according to Niels Bohr, even Einstein failed at that…). By contrast, a theory like Darwin’s theory of evolution is much more efficient and useful for understanding the present and past world. Despite Phoebe’s speech…

You can’t prove Darwin’s theory. But it surely explains the dinosaur bones found all over the world much more efficiently than the God-decides-every-observation theory. It’s not more accurate. But it’s more useful. Even if evolution turns out to be proven wrong some day, it may still be quite useful, just as has been the case for Newton’s classical mechanics. In fact, usefulness may matter more than accuracy…

The history of physics shows how difficult it is to produce an accurate theory. If you check Thibault’s article on the evolution of the understanding of the forces of nature, you’ll end up wondering if there even is a point in striving to search for an accurate theory. Still, the theories produced are all useful for a better understanding of the laws of the Universe, even though they are not accurate.

Moreover, today, quantum theory is considered an extremely accurate theory. However, it is pointless to use it to explain why the American people decided to elect Barack Obama in 2008, or why you are having so much fun reading this article. Explaining that with quantum theory would require calculations over hundreds of billions of billions of billions of particles. Carrying out these calculations would yield an accurate result, but there is no way that today’s computers, which can barely handle millions of billions of data, could make the calculations. And there is absolutely no way that a human ever will. Instead, in order to study these phenomena, it’s much better to have a useful theory (let’s call it psychology), although it won’t be totally accurate.

Observations at the microscopic level show that particles behave in very counter-intuitive ways. For one thing, the world really seems inherently probabilistic. As a result, any deterministic theory is doomed not to be accurate. In fact, in any field but theoretical physics, it’s hopeless to design accurate theories. Instead, in these other fields, theories should aim at a balance between accuracy and usefulness. The latter condition requires a certain simplicity. Indeed, a theory can’t explain and predict much if it isn’t simple enough.

I believe that model-dependent realism is a huge opportunity for more tolerance. When you are having a debate, you have to think that the other person may be just as right as you, even though what they are saying contradicts what you are saying. As long as the contradictions mainly concern what’s not observable, both your theory and the other’s theory may be right. And if the other’s theory explains the observations just as well as yours, it’s an opportunity for you to gain a whole other understanding of things, which will certainly be very useful for other aspects. I’m sure that if Copernicus were to plan the Curiosity rover expedition to Mars, he’d be glad to listen to Ptolemy talking about his model of the universe…

Let’s sum up

I strongly believe that model-dependent realism is an extraordinary breakthrough for epistemology and fundamental science. It’s an extremely logical and accurate approach to the definition of knowledge and reality. With model-dependent realism, we can answer the question: “Do electrons exist?” The answer would be yes for accurate models that assume their existence and contradict no observation, and no for accurate models that assume their non-existence, like the God-decides-every-observation theory. More than ever, truth is relative. This explains Jeff Winger’s speech from the TV show Community.

Now, the harder question Stephen Hawking and Leonard Mlodinow have tried to answer was: “Does God exist?” The way they answered the question was by using the same reasoning as the apocryphal answer of Pierre-Simon Laplace to Napoleon Bonaparte, when the latter asked the former if he believed in God. Pierre-Simon Laplace answered:

“I had no need of that hypothesis.”

I actually doubt that Laplace managed to explain the world without this hypothesis, given how hard it was for Hawking and Mlodinow to do so. In particular, many scientists believe that God might have played a role in fixing the fundamental parameters of the universe, such as the number of dimensions. Having noticed that, according to M-theory, the number of possible values of these fundamental parameters is finite, Hawking and Mlodinow considered a theory in which there is one universe for each possible value of these parameters. All these possible universes exist, forming the multiverse. But they hardly interact with each other. Our universe is the way it is because it’s one of the few that an intelligent form of life could inhabit. If the theory of the multiverse yields no mistaken prediction, then it will be an accurate theory. It also assumes the non-existence of God. Thus, God does not exist in this accurate theory.

According to model-dependent realism, it depends on which accurate theory is considered.

Very nice article !

I was easy to eat it =)

However, it makes me think that research will come to an end one day because of the possibility of the creation of as many accurate theories as you need… Is that right ?

Bisous

I think the world is complicated enough, so that a great great great number of very different theories (accurate or not) may all be useful, depending on the studied cases. And even then, they will need to be well structured and understood so that everyone can easily know which one to choose for which case. Maybe the human mind is not powerful enough to do that… in which case research may go on and on.

Anyways, it will be a long time before we reach the understanding of most useful theories… Research still has a lot lot to do…

Hmm, does God exist?

James Dunn postulates something called quantum entangled systems in his book on building universes.

He sets up a system based on quantum causality, the independent causality associated with a quantum event.

He doesn’t say that God exists, but he describes the potential of a cognitive control to stabilize an unstable characteristic of causality so that though deterministic, the causal systems and their magnitude assertions evolve consistent with physics. So God would be a control function not much concerned with our well being.